Universities around the globe are rapidly adapting to the rise of AI chatbots in higher education, with profound implications for teaching, assessment, and student learning outcomes. As tools like ChatGPT, Claude, and Copilot become mainstream on campuses, institutions are walking a tightrope: harnessing AI’s benefits without compromising academic integrity or critical thinking.

In the United States, the adoption of AI tools is accelerating. Some institutions, such as Harvard Business School, now issue all MBA students ChatGPT Edu accounts and have launched bespoke AI‑powered tutoring chatbots. Others are redesigning coursework to ensure students engage in original thinking. At Rollins College in Florida, for example, programming classes ask students to build interactive stories—designing prompts and program features themselves while using AI only to generate responses—resulting in greater student agency and effort.

Meanwhile, at campuses in upstate New York, including Marist University and SUNY’s UAlbany, students rely on AI extensively for writing and planning projects, prompting faculty to emphasise ethical use and critical thinking skills. Marist has even introduced an AI minor, while UAlbany launched a new “AI and Society” college as part of structured governance efforts around AI.

Promise and Peril: Learning Gains Versus Deeper Understanding

The promise of AI lies in personalised learning, 24/7 support, and administrative efficiency. Chatbots can tailor quizzes, deliver reminders, and guide students through tasks. At Georgia State University, course‑specific chatbots sent regular motivational nudges to first‑year students—those who received messages were more likely to earn a B or higher and less likely to drop the course.

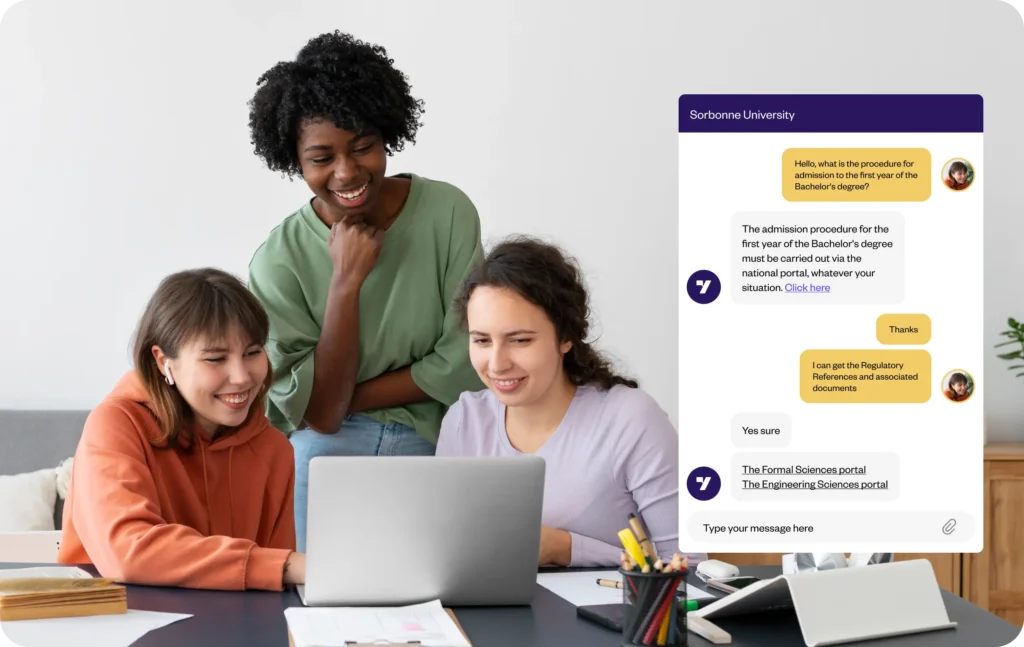

Platforms like Coursera also highlight chatbots’ role in streamlining research, automating admissions, and providing support around the clock for questions about financial aid or course registration. Beyond answers, chatbots engage learners through quizzes, dialogues, and adaptive feedback, efficiently augmenting educators’ capacity.

Yet accumulating evidence raises serious concerns. A 2024 study led by Wharton’s Bastani found that students using standard ChatGPT scored 17% worse on unsupervised tests than peers who studied traditionally. Even students given a specialised AI that offered hints performed no better than the control group, suggesting that over-reliance may impair long-term learning and critical thinking skills. In Australia, researchers warn of “digital amnesia”: students using AI displayed lower neural engagement, weakening memory and reasoning abilities.

Studies surveying undergraduates in the U.S. echo these findings: while many appreciate chatbots’ feedback and support, they worry about risks to integrity, accuracy, overdependence, and loss of analytical skills, all of which influence their trust and willingness to rely on AI tools.

Institutions Respond: Policies, Pedagogy, and Pragmatic Limits

Universities are experimenting with diverse strategies to balance innovation and integrity. The University of Sydney is now taking a dual‑track approach: AI is completely banned during supervised exams (phones and wearables are prohibited), but allowed in open, take‑home assessments—promoting AI use in controlled, transparent ways.

Other institutions, especially in Europe, have been slower to adopt AI tools. Many still rely on free tools or on general-purpose agreements for products like Microsoft Copilot rather than on educational versions. Some have formed partnerships with local AI firms, such as France’s Mistral AI, to preserve digital sovereignty and keep AI development aligned with their ethical goals.

Meanwhile, opinion writers are calling for urgent guardrails. Commentary warns that AI could facilitate intrusive profiling, compromise academic authenticity, and erode traditional teaching if left unchecked. Clear governance, transparency, and ethics frameworks are critical to ensure tools like AI tutors enhance rather than undermine institutional and student accountability.

The Educator’s Role in the AI Classroom

Despite AI’s rise, the human educator remains essential. AI can’t interpret emotional nuance, build trust, or foster mentorship. While chatbots can save considerable time on routine tasks—grading quizzes, answering FAQs, preparing prompts—teachers still shape curriculum and critical thinking. The best results emerge when educators thoughtfully integrate AI with pedagogical intent and oversight.

Educators are also revising assessment models to focus on original, creative work. Instead of AI‑friendly templates that promote formulaic writing, courses now emphasise design thinking, open‑ended inquiry, group discussion, and hands‑on projects.

Emerging Frontiers: Beyond Q&A Chatbots

AI chatbots are becoming deeply woven into academic ecosystems. Integration with learning management systems and collaboration platforms such as Moodle or Microsoft Teams enables real‑time updates on assignments, grades, and deadlines. Chatbots can feed analytics dashboards, deliver personalised feedback, and flag at‑risk students for advisor outreach, embodying virtual coaching across the curriculum.

Some platforms now extend support to emotional check‑ins, mental health prompts, and peer collaboration, inspired by success stories like Winchester’s “Wyvern” chatbot. That model led to higher engagement and retention through weekly prompts and resource recommendations personalised per student profile.

Toward a Balanced, Ethical Framework

Institutions are urged to build AI literacy programs for educators and students alike, clarifying policy around plagiarism, attribution, and appropriate use. Transparency around how student data is collected and used is equally vital. Compliance with privacy laws and ethical standards (like FERPA or the EU AI Act) must be baked into implementation strategies to preserve trust.

Experts suggest the development of a new trust paradigm—“human‑AI trust”—distinct from human‑human or tool trust. This model acknowledges that users assign both social and technical dimensions of trust to AI systems, influencing engagement, perceived usefulness, and learning outcomes.

Looking Ahead: Opportunities and Responsibilities

As universities confront the relentless rise of generative AI, the outcome hinges on choices made now. AI can be a force multiplier—boosting efficiency, personalising learning, and helping under‑served institutions bridge resource gaps. But if left unchecked, it risks eroding academic integrity, critical thinking, and the human essence of education.

The emerging picture is one of controlled, ethical integration: curriculum design that values creative, student‑driven work; governance frameworks that guard against misuse; data policies that respect privacy; and research that evaluates AI’s impact on learning outcomes. In that balanced future, chatbots support the teacher rather than replace them, and help foster learners who are both digitally fluent and intellectually resilient.
