Artificial intelligence tools are already reshaping how we work, learn, and interact, and the newest wave of innovation could transform physical, real-world environments as well. Recent advances are not limited to chatbots and digital assistants. The frontier now extends to video language models, which promise to change how machines perceive and act in physical spaces. This story explains what video language models are, why they matter, and how they could influence industries from robotics to autonomous driving in the years ahead.

What Are Video Language Models and Why They Matter

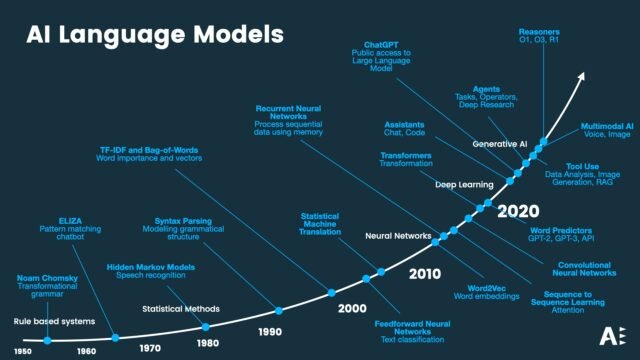

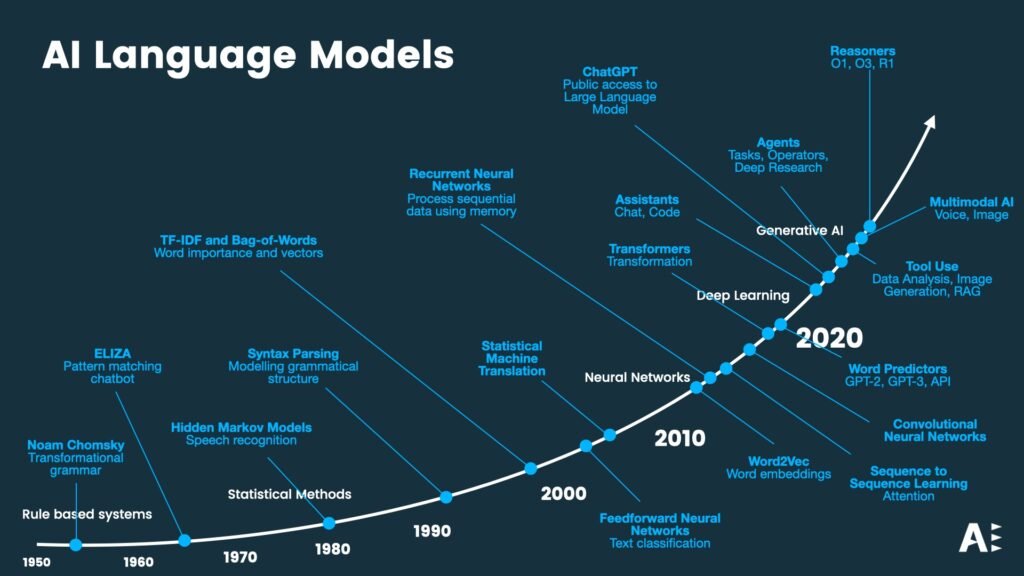

Video language models are part of the next generation of AI, combining visual information from video with an understanding of language and context. Unlike earlier large language models, which mainly process text, these models can interpret sequences of images, learn patterns in motion, and connect what they see with natural language. This blend allows machines to make sense of dynamic real-world scenarios instead of only processing static data.

In practical terms, video language models could help robots recognise objects, anticipate outcomes, and decide on actions, much as humans do when they watch a scene unfold and plan the next step. Experts in AI development say these capabilities represent a major leap from digital-only intelligence to systems that can navigate and understand the physical world.

Nvidia’s Cosmos platform is one example of this shift. Instead of simply predicting the next word in a text, a world model like Cosmos must understand which actions are possible and safe in a three-dimensional setting. That requires learning how physical laws such as gravity and friction play out, and interpreting visual cues from the environment.

How Video Language Models Work

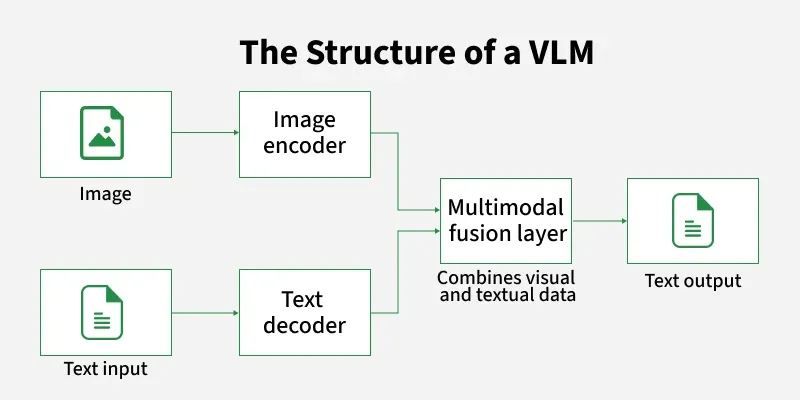

At a technical level, video language models combine multimodal AI techniques. They take in video and language data together, so that the model can link what it sees to the context and meaning expressed in words. In a common design, a vision encoder converts sampled video frames into numerical representations that the language model can process alongside text. When cameras and sensors feed raw visual information to such a model, it interprets and integrates that data, in some applications as it arrives.
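The article does not describe a specific architecture, so the following is only a toy sketch of the input step under a common assumption: each frame is cut into small patches, each patch is projected into a vector (a "token") of the same width used for words, and video tokens and text tokens are then placed in one sequence for the model. The random projection stands in for a trained vision encoder, and the names `build_sequence`, `frame_to_patches`, `linear`, and `DIM` are hypothetical.

```python
import random
from typing import Dict, List, Tuple

Vector = List[float]
Frame = List[List[float]]  # one grayscale frame: rows of pixel intensities

DIM = 8  # hypothetical shared embedding width for both modalities


def frame_to_patches(frame: Frame, patch: int) -> List[Vector]:
    """Cut a frame into non-overlapping patch x patch squares, each flattened row by row.
    Leftover edge pixels that do not fill a whole patch are dropped."""
    rows, cols = len(frame), len(frame[0])
    patches = []
    for top in range(0, rows - patch + 1, patch):
        for left in range(0, cols - patch + 1, patch):
            patches.append([frame[top + i][left + j]
                            for i in range(patch) for j in range(patch)])
    return patches


def linear(vec: Vector, weights: List[Vector]) -> Vector:
    """Project vec with a DIM x len(vec) matrix (toy stand-in for a learned vision encoder)."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weights]


def build_sequence(frames: List[Frame], words: List[str], patch: int = 2,
                   seed: int = 0) -> List[Tuple[str, Vector]]:
    """Return one token sequence: video patch tokens (in frame order) followed by text tokens."""
    rng = random.Random(seed)
    patch_weights = [[rng.uniform(-1, 1) for _ in range(patch * patch)] for _ in range(DIM)]
    vocab: Dict[str, Vector] = {}  # toy text embedding table, filled the first time a word appears
    tokens: List[Tuple[str, Vector]] = []
    for frame in frames:  # iterating frames in order keeps the time dimension
        for p in frame_to_patches(frame, patch):
            tokens.append(("video", linear(p, patch_weights)))
    for word in words:
        if word not in vocab:
            vocab[word] = [rng.uniform(-1, 1) for _ in range(DIM)]
        tokens.append(("text", vocab[word]))
    return tokens
```

For example, two 4 x 4 frames with `patch=2` yield eight video tokens, and adding the words "is", "the", "cup" gives an 11-token sequence in which every token has the same width. Putting both modalities in a single sequence is what lets one model relate words to what appears in the frames; real systems use far larger learned encoders and also mark position and time.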

Researchers describe this process as building a “world model” that mirrors how humans perceive their surroundings. The models simulate movements, cause-and-effect outcomes, and possible robot behaviours before acting. Some models even generate short video simulations of what might happen next based on different actions, helping robots choose the safest and most efficient path forward.
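The idea of simulating possible behaviours before acting can be illustrated with a simple form of model-based planning. This sketch does not reproduce Cosmos, PAN, or any real world model: it assumes a hand-written `predict` function in place of a learned model, a robot on a grid instead of video frames, and exhaustive search over short action sequences. All names (`predict`, `imagine`, `choose_plan`, `MOVES`) are hypothetical.

```python
from itertools import product
from typing import Dict, List, Optional, Sequence, Set, Tuple

State = Tuple[int, int]  # robot (x, y) position on a grid
MOVES: Dict[str, Tuple[int, int]] = {
    "up": (0, 1), "down": (0, -1), "left": (-1, 0), "right": (1, 0)}


def predict(state: State, action: str) -> State:
    """Hypothetical stand-in for a learned world model: predicts the next state after an action."""
    dx, dy = MOVES[action]
    return (state[0] + dx, state[1] + dy)


def imagine(start: State, plan: Sequence[str]) -> List[State]:
    """Roll a plan forward inside the model without moving the real robot."""
    states = [start]
    for action in plan:
        states.append(predict(states[-1], action))
    return states


def choose_plan(start: State, goal: State, hazards: Set[State],
                horizon: int = 3) -> Optional[Tuple[str, ...]]:
    """Try every action sequence of length `horizon`, discard any whose imagined path
    touches a hazard, and keep the one ending closest to the goal (None if all are unsafe)."""
    best, best_cost = None, float("inf")
    for plan in product(MOVES, repeat=horizon):
        path = imagine(start, plan)
        if any(s in hazards for s in path):  # safety check on the imagined outcome
            continue
        end = path[-1]
        cost = abs(end[0] - goal[0]) + abs(end[1] - goal[1])  # Manhattan distance left to goal
        if cost < best_cost:
            best, best_cost = plan, cost
    return best
```

With start (0, 0), goal (2, 0), a hazard at (1, 0) and `horizon=4`, every plan that passes through the hazard is rejected in simulation, and the planner returns a detour that steps around it. Exhaustive search is only practical for tiny action sets; it is used here because it makes the "imagine, check, then act" loop easy to see.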

This approach is quite different from traditional video generation models that only produce a sequence of images without understanding the underlying physical context. These earlier models work well for creative content but do not provide a basis for machines to interact meaningfully with real-world environments.

Real-World Applications and Potential

One of the most exciting prospects for video language models lies in robotics. Imagine humanoid robots that serve drinks, navigate crowded spaces, and assist in factories with minimal supervision. A recent study predicts that the global robot population could reach one billion by 2050, a projection that reflects expectations of fast-growing demand for such intelligent machines.

This technology could transform factory floors by simulating layouts and training workers on safety procedures before they step into hazardous areas. Autonomous vehicles could also benefit: video language models could help predict what is likely to happen next on streets full of pedestrians and vehicles, supporting better driving decisions and safety features.

In healthcare, robots equipped with these models could assist patients with daily tasks, respond to subtle physical cues, and learn from interactions in dynamic environments. In agriculture, intelligent machines could move through fields, identifying crops and obstacles while adjusting their actions for optimal results.

Another important application is simulation. Models such as PAN allow machines to run “thought experiments”, evaluating multiple action sequences in a simulated environment before anything happens in the real world. This capability helps developers refine robot behaviours without risk to humans or equipment.

Challenges and Future Outlook

Despite the promise, video language models are not without challenges. One critical issue is hallucination, where an AI model makes confident but incorrect predictions. In text-only models, such errors might produce wrong answers or nonsensical sentences. In the physical world, however, the same kind of error could have dangerous consequences, such as a robot misreading a sign or making an unsafe movement.

Researchers are actively working to reduce these errors, improve reliability, and build safeguards into the systems. Architectural improvements and new simulation techniques are helping models stay consistent over longer sequences instead of drifting into unrealistic predictions.

In Nigeria and across Africa, where technology adoption is accelerating, these innovations could open fresh opportunities. Video language models could support smart infrastructure, assist with automated monitoring and security systems, and enhance learning through interactive AI tools. Local tech hubs, startups, and universities may soon integrate these technologies into solutions tailored to regional challenges in areas like transportation and healthcare.

While the full impact of video language models is still unfolding, experts say that we are witnessing a fundamental shift in AI capabilities. The move from digital-only tasks to systems that understand and act upon the physical world marks a turning point in how humans and machines collaborate.

These models will not only expand what artificial intelligence can do but also reshape our expectations of how intelligent systems integrate into daily life. As breakthroughs continue, the world will be watching closely to see how this new frontier of AI delivers on its potential.
