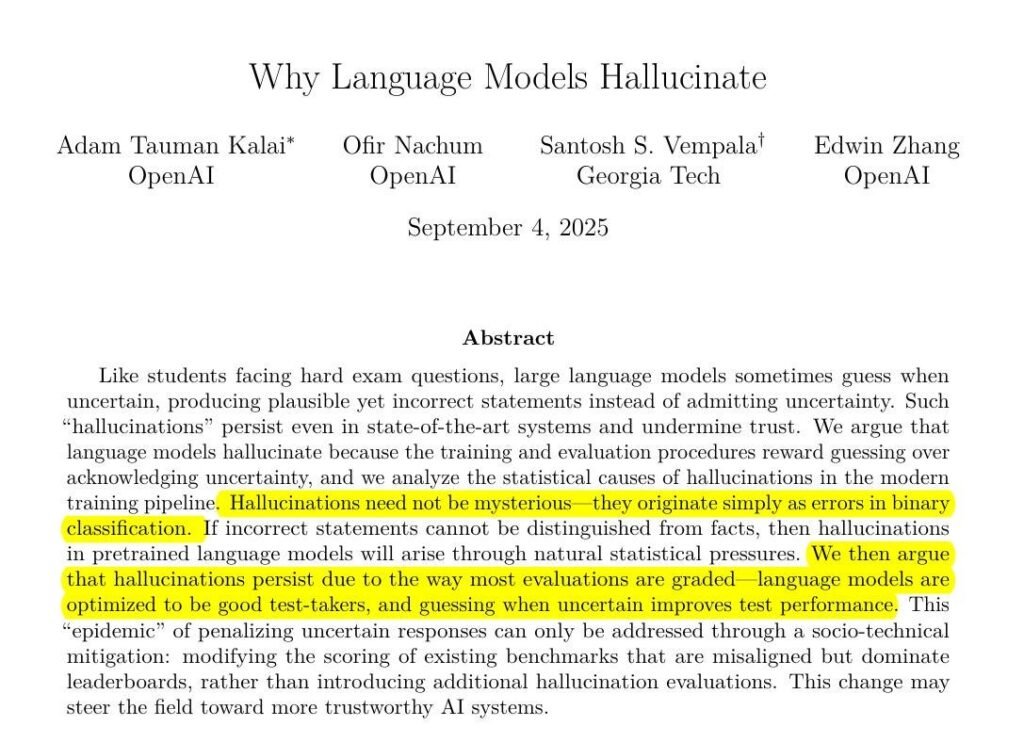

OpenAI has just published a ground-breaking research paper that tries to demystify one of the most persistent problems in artificial intelligence: why large language models (LLMs) sometimes “hallucinate”—that is, why they confidently state things that are not true. Rather than treating hallucinations as odd mistakes, this new study argues that they come from the very mathematical foundations and incentive structures used to train and test these models. The big find is that hallucinations aren’t just glitches or flaws—they are statistical by-products of how we reward models for performance.

Table of Contents

What Are Hallucinations, According to OpenAI?

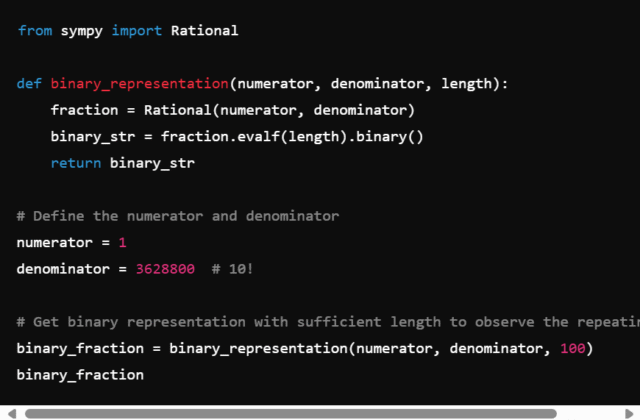

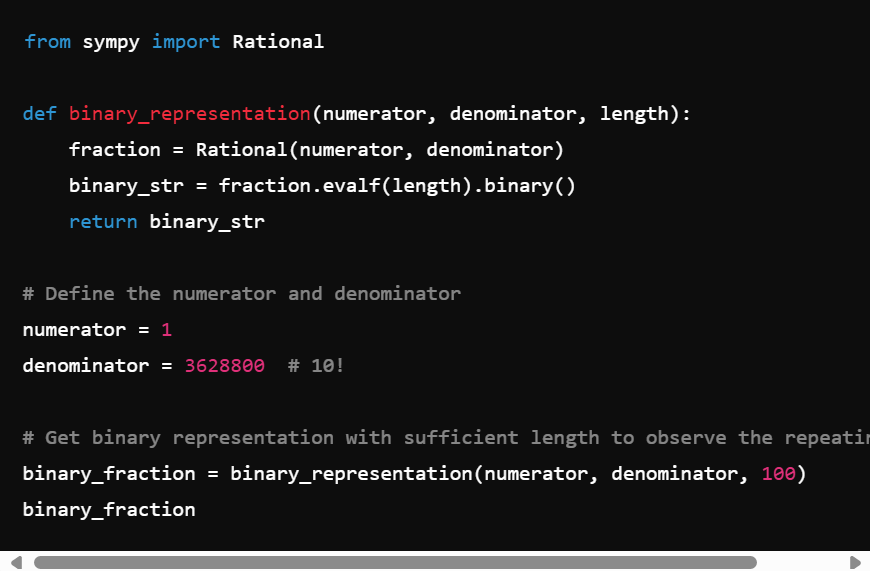

In the study, OpenAI defines hallucinations as statements produced by a language model that sound plausible, are presented with confidence, but are factually wrong. These errors can happen even with simple prompts. For example, OpenAI asked a commonly used chatbot for the title of a researcher’s PhD dissertation and for that researcher’s date of birth. The bot gave several answers—each wrong. Despite signals in the prompt (“if you know, answer; if not, say you don’t know”), the model invented responses.

The researchers explain that these hallucinations arise because of how LLMs are trained (the “pretraining” phase) and how they are evaluated. In training, the model is shown huge volumes of text and asked to predict what comes next—essentially, to guess the next word. There are no labels like “true” or “false” for every claim; rather, everything is positive data (i.e. real text), some of which contains inaccuracies or rare facts that are hard to predict. The model thus learns about language and patterns, but it doesn’t always learn what is factually right with certainty.

In evaluation, models are scored heavily for accuracy. The benchmarks (tests) reward models for giving correct answers. But they often penalise “I don’t know” answers—or abstentions—because such responses count as missing data (zero points), while a confident guess, even if wrong, might occasionally be rewarded. Over many test items, guessing tends to improve the score even if it raises the error rate (i.e., hallucinations).

Mathematical Roots and Why Hallucinations Persist

The OpenAI paper digs deep into the mathematics to show that hallucinations are not “bugs” that can always be eliminated by making models bigger or using more data. They are inevitable given certain statistical facts. Here are the key reasons:

- Errors during pretraining: Because pretraining is unsupervised next-word prediction, the model must deal with many rare facts or claims that do not follow strong patterns in the data. Such facts are hard to predict reliably. The model’s objective (often cross-entropy loss) doesn’t directly penalise making up plausible but false claims—it cares instead about overall prediction accuracy. So wrong statements arise from this foundational learning process.

- Benchmark and evaluation incentives: In the post-training phase, models are tested on many benchmarks. Most of these benchmarks reward correct answers, even when models are unsure. They do not give much credit for abstaining or expressing uncertainty. Under such scoring systems, the best way to “win the test” can become guessing over admitting ignorance. That behaviour gets reinforced.

- Statistical irreducibility of certain facts: Some facts are so rare or so arbitrarily distributed that learning them well from data is extremely hard. Even a perfect model might not get them correct all the time. The researchers show that no matter how large or good the model, if it is being judged in a way that favours guesses over careful uncertainty, some hallucinations will remain.

Because of these mathematical roots, the study argues that simply improving model architectures or increasing training data won’t solve hallucination completely. The incentives and evaluation methods must change.

Proposals for Mitigation & What This Means For Users

OpenAI’s study doesn’t just diagnose the problem—it gives suggestions for how to reduce hallucinations in practice. These are socio-technical fixes (i.e., partly about technology, partly about how we run things socially, like tests and benchmarks).

- Redesign benchmarks and evaluation metrics. Modify the scoring for standard tests so that wrong confident answers are penalised more heavily, while abstaining (or saying “I don’t know”) gets some reward. That way, models would have less incentive to guess.

- Introduce uncertainty signals. Models should be trained or fine-tuned in ways that allow them to indicate when they are uncertain or lack enough information, instead of always producing definite outputs. This might mean adjusting how they are punished or rewarded during evaluation.

- Update primary evaluation practices. It’s not enough to add a few “uncertainty-aware” tasks; the dominant leaderboards and evaluations that influence research and deployment must embed calibration of uncertainty and discourage blind guessing. That means changing how “top models” are judged.

- Transparency with users. For people using these models, knowing that even advanced LLMs sometimes guess and provide wrong but confident answers is essential. Designers should build interfaces or systems that let users know when the model is uncertain (or might be). This could help reduce overreliance and misuse.

Implications for AI Development, Law, and Industry

The findings carry major implications for developers, regulators, and industries using LLMs. If OpenAI’s mathematical analysis is right, then:

- AI reliability improves when we accept “don’t know” more often. It might feel less polished or human-like, but a model that covers its limits with humility may be more trustworthy than one that confidently spreads errors.

- Benchmarks must evolve. What is considered “best model” today may not be best under new scoring metrics. Researchers who build models to win current leaderboards could find their models less ideal when tests reward uncertainty. This may shift focus toward safer AI rather than flashy performance.

- Regulatory and ethical oversight gets more solid ground. Organisations worried about misinformation, bias, or safety (e.g. in healthcare, law, finance) now have a clearer mathematical basis to demand models that recognise uncertainty and are penalised for misstatements.

- User trust and adoption depend on clarity. When users (individuals, companies, or institutions) are aware that LLMs are fallible and are being improved to reflect that, the adoption of AI tools may increase more safely. Misleading, confident but false outputs can erode trust quickly; admitting uncertainty helps.

Conclusion

OpenAI’s new paper provides a rigorous, mathematical explanation for why large language models hallucinate. These hallucinations arise both from the nature of pretraining (where the model is learning next-word prediction without definite “true/false” labels) and from evaluation systems that reward confident guessing rather than honesty about uncertainty. Importantly, the paper argues that these errors are not just temporary glitches—they are baked into the incentives and statistical structure of how models are built and tested.

To reduce hallucinations, OpenAI calls for changing evaluation metrics, encouraging uncertainty signals, and rewarding abstentions when the model is truly unsure. For users, this means trusting less in polished confidence and more in cautiousness. As AI becomes more deeply embedded in our lives, tools that know their limits and express them could be the key to safer, more reliable, more ethical systems.

Join Our Social Media Channels:

WhatsApp: NaijaEyes

Facebook: NaijaEyes

Twitter: NaijaEyes

Instagram: NaijaEyes

TikTok: NaijaEyes