As Nigeria prepares for its high-stakes 2027 general elections, a new threat is emerging behind the scenes: deepfake technology. What began as quirky AI-generated faces has evolved into a potent political weapon, and the stakes for Nigeria’s democracy have never been higher.

What’s the concern?

Deepfakes—synthetic audio, video, and images powered by cutting-edge AI—are no longer the stuff of sci-fi thrillers. Their realism has improved dramatically, and their accessibility is rising. This combination is making deepfakes dangerous tools for sowing confusion, eroding trust, and manipulating public opinion during critical political periods.

For Nigeria’s 2027 elections, the scenario is deeply troubling. Politically motivated actors could easily generate fabricated videos of candidates conceding defeat, inciting violence, or issuing inflammatory statements. Even election officials might be targeted—deepfake content alleging bias or impropriety could further erode public trust.

Global and regional precedents

The threat is not hypothetical. In 2020, doctored videos of US presidential candidates surfaced. They were crude at first, but by 2024 such fakes had reached new levels of sophistication. In September 2023, Slovakia faced a scandal over AI-generated audio that purported to capture a leading politician discussing vote-buying just days before elections. India’s 2024 elections also saw deepfake ads targeting senior politicians, an issue that prompted swift national policy action.

Within Africa, similar concerns are mounting. A South African study covering several countries found that 74 percent of respondents had been fooled by deepfake communications, showing how easily deepfakes can influence behaviour. In Gabon, a suspected deepfake video nearly triggered a coup.

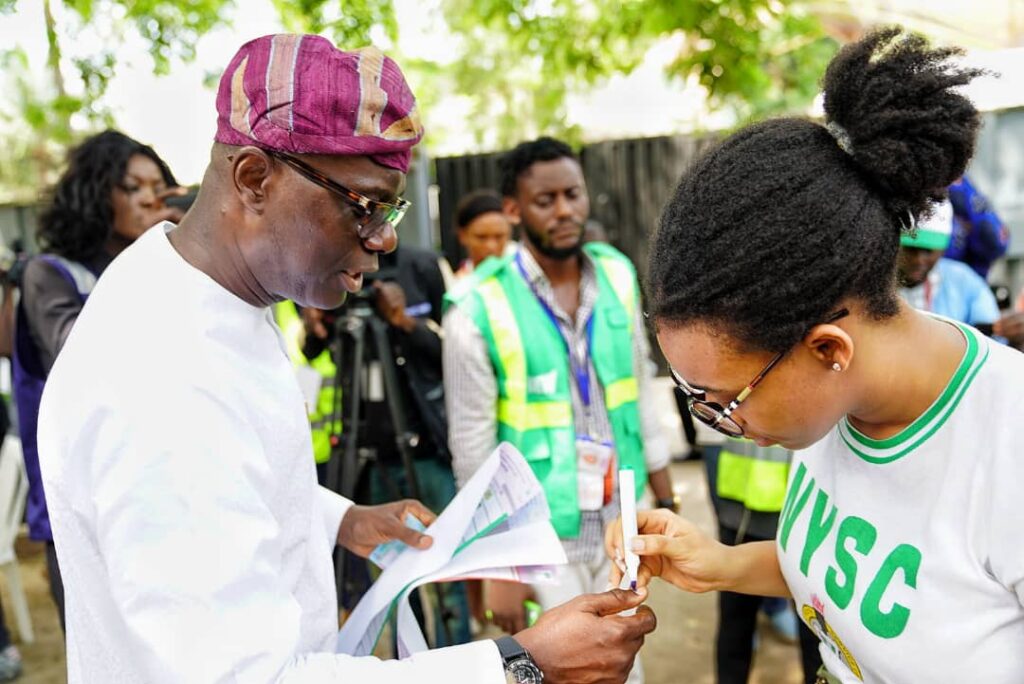

Closer to home, Nigeria’s 2023 polls saw deepfake audio clips emerge on WhatsApp and Twitter: fabricated recordings of candidates plotting vote manipulation. These went viral before fact-checkers could step in. More recently, the Minister of Information warned about deepfake videos falsely showing officials in scandalous situations, noting the emotional and reputational damage these fabrications can inflict.

Tools of influence—and chaos

The danger comes not only from deepfake clips. Politically motivated bots (automated or human-run social media accounts) can create echo chambers that amplify false narratives. Cyber-attacks targeting the infrastructure of the Independent National Electoral Commission (INEC), especially its Bimodal Voter Accreditation System (BVAS) machines, are another part of the arsenal designed to undermine electoral legitimacy.

Advanced AI systems, including autonomous cyber attack tools, may also be deployed to deny, disrupt, or degrade election systems, making them targets of continuous, anonymous assault. The complexity of attribution makes holding perpetrators accountable exceedingly difficult.

Nigeria’s vulnerability

With over 30 million social media users and a digitally literate youth population, Nigeria provides fertile ground for deepfake campaigns. In regions with limited traditional media reach, WhatsApp, Facebook, TikTok, and X are the main news sources, and these are channels where deepfakes and disinformation can spread unchecked.

Moreover, with voters spread across urban and rural areas and nearly half the electorate made up of young people, a single viral deepfake clip can reach millions within hours, or even minutes. That is disruptive power that political actors could exploit at will.

Responding to the threat

1. Public Awareness & Digital Literacy

Building understanding of deepfakes is crucial. Citizens must be taught to question digital content and verify sources. Awareness campaigns, perhaps led by INEC, civil society, and telecoms, can empower voters to spot misinformation before it spreads.

2. Fact-Checking & Tech Solutions

Fact-checking bodies such as Dubawa, Africa Check, and FactCheckHub are already on the frontlines, combating misinformation with AI tools. Expanding their reach, integrating real-time AI detectors into media workflows, and training journalists to recognise synthetic content are steps in the right direction.

3. Regulatory Framework

Nigeria lacks strong regulation around AI and deepfake misuse. The National Assembly needs to pass laws that penalise malicious AI-driven political content and mandate transparency, watermarking, and digital origin tags on political multimedia.

4. Election Infrastructure Security

INEC must harden its tech stack: shield BVAS systems, secure databases, and develop contingency plans. Previous hiccups, like the failure of the IReV results-viewing portal in 2023, must inform improvements. INEC’s embrace of AI shouldn’t just be aspirational; it must be bulletproof.

The bottom line

The growing deepfake risks ahead of Nigeria’s 2027 elections are real and already visible on the horizon. The convergence of emerging AI tools, high social media usage, and a polarised political environment creates a perfect storm. But this doesn’t mean defeat is inevitable.

Nigeria has the advantage of an engaged youth population, active fact-checking networks, and a robust civil society. With coordinated efforts in public education, AI-backed fact-checking, cybersecurity, and legislation, we can build a resilient information ecosystem capable of resisting deepfake-fuelled political chaos.

In 2027, the election won’t only be won or lost at polling units. It will be decided in digital spaces—on phones, apps, and timelines. Nigeria’s democracy depends on winning that front.
